Introduction

Large Language Models (LLMs) like GPT-4, DeepSeek-R1, and LLaMA have revolutionized natural language processing (NLP) by enabling machines to perform complex tasks such as translation, summarization, and contextual understanding of human language. Built on the Transformer architecture, these models capture intricate patterns and semantics across vast corpora. However, their enormous size, computational demands, and energy consumption present significant obstacles to deployment, particularly in resource-constrained environments.

Model distillation has emerged as a pivotal technique for addressing these challenges, offering a pathway to compress and optimize LLMs without dramatically sacrificing performance.

This article explores model distillation applied to LLMs, covering foundational concepts, advanced techniques, real-world applications, inherent challenges, and forward-looking trends.

Overview of LLMs

LLMs are typically deep neural networks with billions or even trillions of parameters, trained on massive datasets encompassing books, websites, code, and user-generated content. The Transformer architecture, introduced by Vaswani et al. in 2017, forms the backbone of most LLMs and employs self-attention mechanisms to model contextual relationships between tokens across sequences. This approach allows the model to understand language holistically, capturing syntactic, semantic, and pragmatic nuances.
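To make the self-attention idea concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It assumes toy two-dimensional token vectors and reuses them directly as queries, keys, and values; a real Transformer derives these from learned projections and adds multiple heads, masking, and positional information.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head, without masking.

    Each argument is a list of token vectors (lists of floats).
    Returns (outputs, attention_weights).
    """
    d_k = len(keys[0])
    weights = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights.append(softmax(scores))
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights

# Hypothetical toy tokens; real models use learned Q, K, V projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and describes how strongly one token attends to every token in the sequence, which is how context from the whole sequence enters each token's representation.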

LLM capabilities include:

- Text Generation: Coherent and contextually relevant generation of natural language text.

- Machine Translation: High-quality translation between multiple languages.

- Summarization: Extractive and abstractive summarization of long documents.

- Question Answering: Understanding and responding to natural language questions.

- Dialogue Systems: Creating interactive chatbots and virtual assistants.

Despite their power, LLMs face several challenges:

- Scalability and Deployment: Running models like GPT-4 requires GPUs with high memory capacity and intensive compute, making them impractical for real-time applications on typical devices.

- Latency and Responsiveness: LLM inference can be slow, particularly when multiple requests are handled concurrently.

- Environmental Impact: Training and deploying large models contribute to high carbon emissions and energy usage.

- Accessibility Barriers: Smaller organizations and independent researchers often lack the resources to access or fine-tune such models.

These drawbacks have fueled the exploration of methods for making LLMs lighter and more adaptable, with model distillation at the forefront.

What is Model Distillation?

Model distillation is a process in machine learning by which a smaller model (the student) is trained to replicate the behavior of a larger and more complex model (the teacher). Popularized by Hinton et al. (2015), who demonstrated it on image classification and speech recognition, the method has been successfully adapted to LLMs to enable efficient inference, better generalization, and ease of deployment in constrained environments.

The core idea of distillation is not merely to copy the teacher's final hard predictions but to transfer what it has learned: the distributional knowledge encoded in its output logits and, in some variants, its intermediate activations. This allows the student model to absorb more nuanced and generalized insights that are often lost when training from hard labels alone.

The distillation process includes:

- Soft Target Learning: The student model learns from the probabilistic output distributions (soft labels) produced by the teacher model, offering richer information about relative class probabilities.

- Loss Function Composition: The training objective combines the distillation loss (typically using Kullback-Leibler divergence) and the original supervised loss (cross-entropy with ground truth labels).

- Temperature Scaling: A temperature parameter is applied to soften the teacher’s output distribution, making it easier for the student to learn from subtle relationships.

- Intermediate Representation Matching: In advanced forms, students may be trained to align their hidden layer activations or attention weights with those of the teacher, promoting deeper mimicry.
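The effect of temperature scaling is easiest to see on a small example. The sketch below is an illustration only: the logits are hypothetical and the function name `softmax_with_temperature` is not taken from any library. It divides the logits by a temperature before applying softmax, so higher temperatures spread probability mass toward the less likely classes while preserving their ranking.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits / temperature (numerically stable)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical teacher logits for a three-class problem.
teacher_logits = [4.0, 2.0, 0.5]

sharp = softmax_with_temperature(teacher_logits, temperature=1.0)
soft = softmax_with_temperature(teacher_logits, temperature=4.0)

print("T=1:", [round(p, 3) for p in sharp])
print("T=4:", [round(p, 3) for p in soft])
```

At the higher temperature the top class still wins, but the runner-up classes receive visibly more probability, which is the extra signal the student learns from.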

Benefits of model distillation include:

- Reduced computational footprint and model size

- Lower latency and faster inference

- Decreased energy consumption

- Enhanced suitability for edge and mobile devices

Technical Aspects of Model Distillation

Knowledge Distillation

Knowledge distillation remains the most widely used technique in model compression. The process often involves three entities: a large teacher model, a compact student model, and a dataset (often the same data or a related corpus used to train the teacher). The student model is trained to approximate the teacher’s behavior as closely as possible.

Soft targets produced by the teacher — non-binary class probabilities — contain “dark knowledge,” revealing how the model views class similarities. This is especially useful in multi-class classification and token-level prediction tasks, such as those found in LLMs. The student optimizes a combined loss function:

$$L = \alpha \cdot L_{CE}\big(y, s(x)\big) + (1 - \alpha) \cdot T^2 \cdot \mathrm{KL}\big(p_T(x) \,\|\, p_S(x)\big)$$

Where:

- $L_{CE}$ is the cross-entropy with ground truth labels.

- $\mathrm{KL}$ is the Kullback-Leibler divergence between teacher and student outputs.

- $T$ is the temperature.

- $\alpha$ balances the contribution of hard and soft targets.
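The combined loss can be sketched for a single example in plain Python. This is a minimal illustration rather than a training-ready implementation. It assumes that s(x) is the student's prediction at temperature 1 and that the teacher and student distributions inside the KL term are both softened with the same temperature T. The function names and default values are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits / temperature (numerically stable)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Cross-entropy against a single ground-truth class index."""
    return -math.log(probs[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, label, alpha=0.5, temperature=2.0):
    """alpha * CE(y, s(x)) + (1 - alpha) * T^2 * KL(p_T || p_S)."""
    hard = cross_entropy(softmax(student_logits), label)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student)
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft

# Hypothetical logits for one training example whose true class is 0.
loss = distillation_loss([2.0, 1.0, 0.1], [3.0, 1.5, 0.2], label=0)
```

The T² factor compensates for the gradients of the softened KL term shrinking roughly as 1/T², so the balance between the two terms stays comparable when the temperature changes.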

Parameter-Efficient Fine-Tuning (PEFT)

PEFT techniques complement distillation by minimizing the number of trainable parameters needed to adapt a model to new tasks or domains. These include:

- Adapters: Modular components inserted between layers of the base model. During fine-tuning, only these adapters are updated, preserving the original model weights.

- Low-Rank Adaptation (LoRA): Adds trainable low-rank matrices alongside frozen linear transformations within the model, drastically reducing the number of trainable parameters required for task-specific tuning.

- Prompt Tuning and Prefix Tuning: Instead of updating model weights, these methods learn a small set of continuous vectors that are prepended to the input (prompt tuning) or to the activations of each layer (prefix tuning) to guide the model toward task-relevant behavior.
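To give a sense of the savings from low-rank adaptation, the sketch below counts trainable parameters for one linear layer and applies a LoRA-style update `y = W x + scale * B (A x)`. The layer dimensions, rank, and helper names are hypothetical choices for illustration; production libraries handle batching, scaling conventions, and initialization differently.

```python
def full_params(d_out, d_in):
    """Parameters in a dense weight matrix W of shape (d_out, d_in)."""
    return d_out * d_in

def lora_trainable_params(d_out, d_in, rank):
    """Parameters in A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, scale=1.0):
    """y = W x + scale * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]

# Hypothetical layer size and rank.
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {full // lora}x")

# Tiny forward pass: with B initialised to zero, the layer output is unchanged.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]
B_zero = [[0.0], [0.0]]
y_start = lora_forward(W, A, B_zero, [2.0, 3.0])
```

Starting with B at zero, as LoRA does, means the adapted model initially behaves exactly like the frozen base model, and training only moves it as far as the task requires.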

Combining PEFT with model distillation allows student models to not only replicate general behavior but also specialize for specific domains with minimal resource overhead.

Quantization

Quantization represents model weights and activations using fewer bits, for example by converting 32-bit floating-point numbers to 8-bit integers or even 4-bit representations. This reduces memory requirements and computational load, which is particularly useful for deploying models on edge hardware.
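A quick back-of-the-envelope calculation shows why bit-width matters. The sketch below estimates the memory needed to store weights alone at several precisions. The 7-billion-parameter count is a hypothetical example, and the estimate ignores activations, the key-value cache, and quantization metadata such as scales.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bits_per_weight / 8 / 2 ** 30

# Hypothetical model size used only for illustration.
num_params = 7_000_000_000

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gib(num_params, bits):.1f} GiB")
```

Halving the bit-width halves the weight storage, so moving from 32-bit floats to 4-bit integers cuts it by a factor of eight before any accuracy trade-off is considered.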

Two primary types:

- Post-Training Quantization (PTQ): Applies quantization to a fully trained model without retraining.

- Quantization-Aware Training (QAT): Simulates quantization during training to maintain higher accuracy.
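As a rough illustration of post-training quantization, the sketch below applies symmetric per-tensor int8 quantization to a handful of weights and then dequantizes them to measure the rounding error. The weight values and function names are hypothetical. Real toolchains also calibrate activations and often use per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integer codes back to approximate float values."""
    return [v * scale for v in q]

# Hypothetical trained weights.
weights = [0.42, -1.27, 0.03, 0.88, -0.51]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", q)
print("max round-trip error:", max_error)
```

The round-trip error is bounded by half the scale. Quantization-aware training, by contrast, would apply this same quantize-dequantize step inside the forward pass during training so that the weights learn to tolerate the rounding.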

Recent innovations in quantization include:

- Block-wise and mixed-precision quantization: Applying different bit-widths to different layers or blocks depending on their sensitivity.

- GPTQ: A post-training quantization method for generative pre-trained Transformers that quantizes the weights of large autoregressive models like GPT to low bit-widths without significant degradation.
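The benefit of block-wise scaling can be shown with a small experiment. The sketch below quantizes the same weights to 4 bits twice, once with a single scale for the whole tensor and once with one scale per block of four weights. It illustrates only the block-wise idea and is not the GPTQ algorithm. The weights, block size, and function names are hypothetical.

```python
def quantize_block(block, bits):
    """Symmetric quantization of one block with its own scale."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in block)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in block]
    return codes, scale

def blockwise_roundtrip(weights, block_size, bits=4):
    """Quantize and dequantize weights block by block."""
    restored = []
    for start in range(0, len(weights), block_size):
        codes, scale = quantize_block(weights[start:start + block_size], bits)
        restored.extend(c * scale for c in codes)
    return restored

def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# Hypothetical weights containing a single large outlier (8.0).
weights = [0.02, -0.03, 0.01, 0.04, 0.05, -0.02, 8.0, -0.01]

per_tensor = blockwise_roundtrip(weights, block_size=len(weights))
per_block = blockwise_roundtrip(weights, block_size=4)

print("per-tensor error:", mean_abs_error(weights, per_tensor))
print("per-block error: ", mean_abs_error(weights, per_block))
```

With one scale for the whole tensor, the outlier forces every small weight to round to zero. With per-block scales, only the block containing the outlier suffers, so the average error drops.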

Applications of Distilled LLMs

Mobile Devices

Smartphones and embedded systems benefit enormously from distilled LLMs. Examples include:

- Virtual Assistants: Lightweight versions of BERT or GPT can provide contextual understanding for on-device assistants such as Siri or Google Assistant.

- Text Prediction: Distilled models power predictive typing and autocorrect features.

- Privacy-Preserving NLP: On-device inference avoids transmitting sensitive data to cloud servers.

Healthcare

The healthcare sector requires models that can process medical records, predict diagnoses, and support decision-making while respecting strict privacy regulations.

- Clinical NLP: Tools like ClinicalBERT can be distilled and fine-tuned on electronic health records.

- Diagnostics and Imaging Reports: NLP systems extract structured information from radiology and pathology reports.

- Patient Interaction: Chatbots provide health guidance using lightweight, distilled language models.

Customer Service

Distilled LLMs enhance the scalability and responsiveness of customer support systems:

- AI Chatbots: Deliver fast and coherent answers without querying cloud-hosted models.

- Automated Ticketing Systems: Categorize and escalate tickets based on query context.

- Sentiment Analysis: Monitor and interpret customer emotions in real time.

Edge Computing

Edge environments demand compact, low-latency solutions:

- Voice Interfaces: Smart speakers and voice assistants operate offline with distilled LLMs.

- Smart Surveillance: NLP interfaces interpret spoken commands or annotations on-device.

- Industrial Automation: Context-aware control systems benefit from language interfaces.

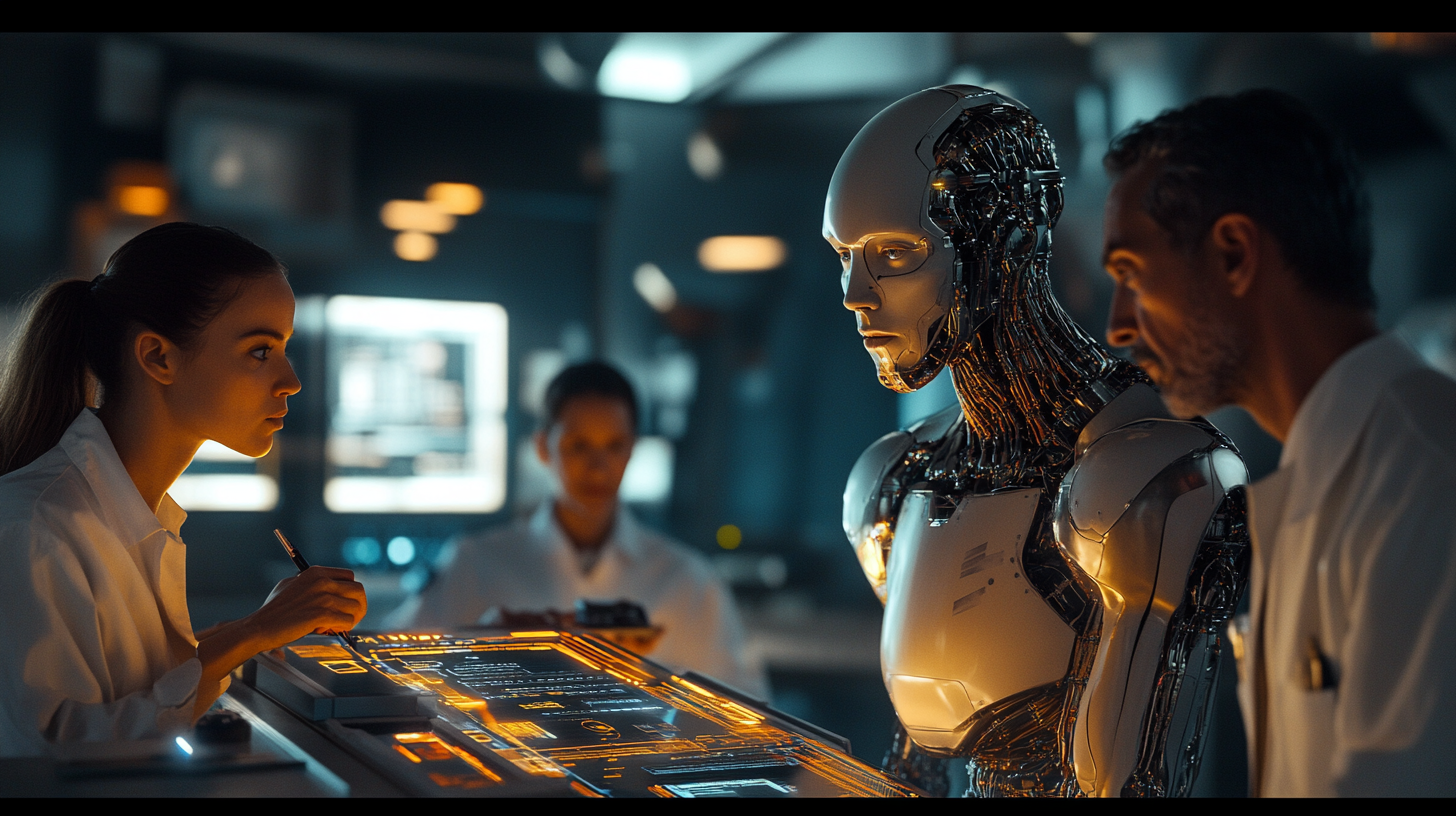

Self-Hosting

With the increasing emphasis on data privacy, sovereignty, and cost control, self-hosting distilled LLMs is becoming more popular among organizations:

- On-Premise Deployment: Companies deploy compact models internally to maintain data confidentiality and reduce dependency on third-party APIs.

- Developer Tools: Local model hosting allows for customization, version control, and integration into secure pipelines without exposing internal data.

- Cost Efficiency: Running distilled models on commodity hardware or smaller cloud instances lowers operational costs while maintaining acceptable performance.

Challenges and Limitations

Despite their advantages, distilled LLMs face notable limitations:

- Accuracy Degradation: Reduced capacity can lead to a decline in model performance, especially in complex reasoning or long-context scenarios.

- Knowledge Gaps: Student models might miss out on some of the generalization and rare knowledge encoded in teacher models.

- Lack of Robustness: Smaller models are more vulnerable to adversarial attacks and noisy inputs.

- Transparency and Trust: Compression may further obfuscate internal workings, complicating explainability efforts.

- Ethical Risks: Biases and toxic outputs in the teacher model can propagate or even become harder to detect in student models.

- Maintenance Complexity: Keeping multiple versions (teacher and student) in sync across updates or fine-tuning efforts adds complexity.

Future Trends in Model Distillation

Hybrid Approaches

Research is increasingly exploring integrated pipelines that combine distillation, PEFT, and quantization. For example:

- Distilled-LoRA Models: Combine knowledge distillation with low-rank adaptation for specialized applications.

- Quantized Adapter Distillation: Compress both the model and the inserted adapters to create ultra-lightweight models.

Multi-Modal Distillation

With the rise of models like CLIP and Flamingo, distillation is being extended to multi-modal settings:

- Cross-Modal Distillation: Student models learn from teacher models trained on both vision and language inputs.

- Speech-Language Compression: Efficient assistants combine speech recognition and text understanding.

Advanced Optimization Algorithms

Emerging algorithms focus on making the distillation process more data- and compute-efficient:

- Self-Distillation: Models distill knowledge into themselves iteratively.

- Online and Continual Distillation: Enables learning from streaming data or evolving knowledge bases.

Democratization of AI

Distilled models open doors for:

- Open-Source Projects: Community-led efforts like TinyLLMs and DistilBERT democratize AI access.

- Academic Research: Reduced resource requirements allow broader experimentation.

- Developing Nations: Local language models can be trained and deployed with minimal infrastructure.

Conclusion

Model distillation represents a critical advancement in making LLMs practical, efficient, and inclusive. As large-scale models continue to grow in capability, the need for streamlined, deployable alternatives becomes more urgent. By leveraging techniques such as knowledge distillation, parameter-efficient fine-tuning, and quantization, we can extend the reach of AI to domains and devices previously considered infeasible. As innovation progresses, the field will benefit from hybrid techniques, ethical considerations, and democratizing efforts that ensure AI remains accessible, interpretable, and aligned with human values.
