Building Resilient Systems: A Practical Introduction to Chaos Engineering and Fault Injection

Chaos Engineering helps teams simulate real-world failures, such as server crashes or latency spikes, before they happen in production. By proactively testing resilience, organizations can uncover weaknesses, boost reliability, and prevent costly outages.

Why Prepare for the Worst?

In 2017, a simple command-line mistake triggered a massive AWS S3 outage that crippled thousands of websites, apps, and services. This incident wasn't just a fluke — it was a wake-up call. Modern systems are distributed, complex, and deeply interconnected. A minor glitch in one service can ripple into major outages elsewhere.

So how do we prepare our systems to survive the unpredictable? Enter Chaos Engineering and Fault Injection.

These proactive resilience strategies help engineering teams simulate real-world failures before they happen in production. The goal isn't to break things for fun — it's to uncover vulnerabilities, build confidence, and ensure business continuity under stress.

What Is Chaos Engineering?

Chaos Engineering is the discipline of experimenting on a system by intentionally injecting faults to observe how it behaves under stress. It follows a scientific approach:

- Define what "normal" looks like.

- Introduce a failure.

- Monitor the impact.

- Learn and improve.

The key idea: if you practice failure regularly, your system (and your team) gets better at handling the unexpected.
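The four steps above can be sketched as a small experiment runner. This is a minimal illustration, not a real chaos tool: `run_experiment`, `ExperimentResult`, and the toy `replicas` system are hypothetical names introduced here, and a real steady-state check would query your monitoring system rather than a dictionary.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExperimentResult:
    steady_before: bool
    steady_during: bool
    steady_after: bool

    @property
    def hypothesis_held(self) -> bool:
        # The system tolerated the fault if it stayed healthy during and after it.
        return self.steady_during and self.steady_after


def run_experiment(
    is_steady: Callable[[], bool],
    inject: Callable[[], None],
    rollback: Callable[[], None],
) -> ExperimentResult:
    """Run one experiment: verify normal, inject a fault, observe, roll back."""
    # 1. Define "normal": refuse to start if the system is already unhealthy.
    if not is_steady():
        raise RuntimeError("system is not in steady state; aborting experiment")
    # 2. Introduce a failure.
    inject()
    try:
        # 3. Monitor the impact while the fault is active.
        during = is_steady()
    finally:
        # Always remove the fault, even if the check itself raises.
        rollback()
    # 4. Learn: confirm recovery and hand the result back for review.
    after = is_steady()
    return ExperimentResult(True, during, after)


# Toy system (hypothetical): healthy as long as at least one replica is up.
replicas = {"a": True, "b": True}
result = run_experiment(
    is_steady=lambda: any(replicas.values()),
    inject=lambda: replicas.update(a=False),
    rollback=lambda: replicas.update(a=True),
)
print(result.hypothesis_held)
```

With two replicas the hypothesis holds, because losing one still leaves a healthy replica. With a single replica the same experiment would report that the hypothesis failed, which is exactly the kind of finding the loop is meant to surface.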

Fault Injection vs. Chaos Engineering: What's the Difference?

Both involve failure scenarios, but there's a subtle distinction:

- Fault Injection: Focuses on low-level, precise disruptions (e.g., injecting latency, CPU spikes, or dropping packets). Often used in development or test environments.

- Chaos Engineering: A broader, system-level approach that includes fault injection but emphasizes measuring systemic resilience in realistic conditions.

Think of fault injection as a tool in the Chaos Engineer's toolkit.
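To make the "tool" idea concrete, here is a rough sketch of application-level fault injection in Python: a decorator that adds latency and, with some probability, raises an error instead of calling the wrapped function. The names `inject_fault` and `InjectedFault` are invented for this example and do not come from any library.

```python
import functools
import random
import time
from typing import Any, Callable, Optional


class InjectedFault(Exception):
    """Raised in place of a real failure when a fault is injected."""


def inject_fault(
    latency_s: float = 0.0,
    error_rate: float = 0.0,
    rng: Optional[random.Random] = None,
) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Wrap a function so each call is delayed and may fail artificially."""
    rng = rng or random.Random()

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            if latency_s > 0:
                time.sleep(latency_s)  # simulated network or disk delay
            if rng.random() < error_rate:
                # Fail before the real function runs, like a dropped request.
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)

        return wrapper

    return decorator


@inject_fault(latency_s=0.01, error_rate=0.2, rng=random.Random(7))
def get_price(item: str) -> int:
    # Hypothetical business function used only for illustration.
    return 42
```

Passing a seeded `random.Random` keeps a test run reproducible, which matters when you want to replay the exact sequence of injected failures.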

The Origins of Chaos: From Chaos Monkey to the Simian Army

Netflix famously pioneered Chaos Engineering with Chaos Monkey, a tool that randomly terminates production instances to test system fault tolerance. It evolved into the Simian Army, a suite of tools simulating various outages like zone failures, latency spikes, and more.

This culture of "breaking things on purpose" has since spread to organizations like Google, Amazon, and Microsoft — each developing their own internal chaos tooling.

Why Resilience Matters

Modern distributed architectures bring new challenges:

- Microservices increase complexity and dependencies.

- Cloud platforms introduce network instability and opaque infrastructure.

- CI/CD pipelines ship changes rapidly, leaving little time for thorough testing.

And the cost of downtime? Staggering.

- Amazon reportedly loses $1,093,569 per minute during outages.

- A 2019 Google Cloud outage took down YouTube, Gmail, and third-party apps.

- Financial institutions have lost millions due to transaction delays from cascading service failures.

Chaos Engineering helps teams uncover these hidden risks before they turn into headlines.

Principles of Chaos Engineering

To practice Chaos Engineering responsibly, follow these principles:

- Build a steady-state hypothesis. Define what "normal" looks like: latency, throughput, error rate, etc.

- Vary real-world events. Introduce realistic failure modes: server crashes, network delays, DNS issues.

- Run experiments in production or realistic environments. Don't test in unrealistic conditions. Use staging clusters that mirror production.

- Minimize blast radius. Start small. Isolate failure to a single pod, node, or region.

- Automate and iterate. Use automation to repeat, scale, and learn from experiments.
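As one way to picture "minimize blast radius," the sketch below caps how many targets an experiment may touch and defines a simple abort rule. Both helpers (`pick_targets`, `should_abort`) and the thresholds are assumptions made for illustration; real tools expose their own selectors and halt conditions.

```python
import random
from typing import List, Sequence


def pick_targets(
    candidates: Sequence[str], max_fraction: float, rng: random.Random
) -> List[str]:
    """Pick a random subset of targets, capped at max_fraction (at least one)."""
    if not candidates:
        return []
    if not 0 < max_fraction <= 1:
        raise ValueError("max_fraction must be in (0, 1]")
    limit = max(1, int(len(candidates) * max_fraction))
    return rng.sample(list(candidates), limit)


def should_abort(error_rate: float, baseline: float, tolerance: float) -> bool:
    """Stop the experiment once errors exceed the steady-state baseline plus tolerance."""
    return error_rate > baseline + tolerance


pods = [f"pod-{i}" for i in range(8)]
targets = pick_targets(pods, max_fraction=0.25, rng=random.Random(1))
print(len(targets), "of", len(pods), "pods selected")
```

Starting with a small `max_fraction` and widening it only after clean runs keeps the cost of a surprising result low.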

Types of Faults You Can Inject

Here are common categories for chaos experiments:

Infrastructure-Level Faults:

- Instance termination

- Network latency, packet loss

- Disk I/O failures

Application-Level Faults:

- Memory leaks

- CPU exhaustion

- Database timeouts

Dependency Failures:

- Third-party API slowness

- Authentication service failures

- Misconfigured DNS
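A dependency fault such as third-party API slowness is usually tested by checking that the caller degrades gracefully. The following sketch simulates a slow dependency and verifies that a timeout plus fallback keeps the caller responsive. `slow_dependency`, `call_with_fallback`, and the `"cached-data"` fallback are hypothetical stand-ins, not part of any real client library.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def slow_dependency(delay_s: float) -> str:
    """Stand-in for a third-party API that takes delay_s seconds to answer."""
    time.sleep(delay_s)
    return "live-data"


def call_with_fallback(
    delay_s: float, timeout_s: float, fallback: str = "cached-data"
) -> str:
    """Call the dependency, but return a fallback if it exceeds the timeout."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(slow_dependency, delay_s)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return fallback
    finally:
        # Do not block on the slow call; the worker thread finishes in the background.
        pool.shutdown(wait=False)


print(call_with_fallback(delay_s=0.3, timeout_s=0.05))  # dependency too slow
print(call_with_fallback(delay_s=0.0, timeout_s=1.0))   # dependency healthy
```

The same pattern applies to the other dependency faults listed above: inject the failure at the boundary, then assert on what the caller does about it.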

Getting Practical: Chaos Mesh YAML Example

Want to inject latency into a Kubernetes service using Chaos Mesh? Here's a simple example:

    apiVersion: chaos-mesh.org/v1alpha1
    kind: NetworkChaos
    metadata:
      name: inject-latency
    spec:
      action: delay            # add latency to the pod's network traffic
      mode: one                # affect a single pod chosen from those matching the selector
      selector:
        namespaces:
          - my-service-namespace
        labelSelectors:
          app: my-app
      delay:
        latency: '1000ms'
      duration: '30s'          # the fault is removed after 30 seconds

This manifest tells Chaos Mesh to add a 1-second network delay to one pod labeled app: my-app in the my-service-namespace namespace for 30 seconds.
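If you want to generate variations of this experiment (different latencies, namespaces, or durations), one option is to build the manifest programmatically. The sketch below reproduces the same fields as the YAML above as a Python dictionary and prints it as JSON, which kubectl also accepts for manifests. The helper name `network_delay_manifest` is made up for this example, and it only mirrors the fields shown in this article, not the full NetworkChaos schema.

```python
import json
from typing import Any, Dict


def network_delay_manifest(
    name: str, namespace: str, app_label: str, latency: str, duration: str
) -> Dict[str, Any]:
    """Build a NetworkChaos delay manifest with the same fields as the YAML example."""
    return {
        "apiVersion": "chaos-mesh.org/v1alpha1",
        "kind": "NetworkChaos",
        "metadata": {"name": name},
        "spec": {
            "action": "delay",
            "mode": "one",  # a single matching pod
            "selector": {
                "namespaces": [namespace],
                "labelSelectors": {"app": app_label},
            },
            "delay": {"latency": latency},
            "duration": duration,
        },
    }


manifest = network_delay_manifest(
    name="inject-latency",
    namespace="my-service-namespace",
    app_label="my-app",
    latency="1000ms",
    duration="30s",
)
print(json.dumps(manifest, indent=2))
```

Keeping the generator in version control alongside the service makes each experiment reviewable like any other change.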

Top Chaos Engineering Tools

| Tool | Description | Platform |
|---|---|---|
| Chaos Monkey | Kills EC2 instances randomly | AWS, Netflix legacy |
| Gremlin | Enterprise chaos platform | SaaS, CLI, Kubernetes |
| Chaos Mesh | Kubernetes-native chaos framework | Open-source |
| LitmusChaos | Cloud-native chaos with CRDs & workflows | Open-source, CNCF |
| AWS Fault Injection Service (formerly Fault Injection Simulator) | Controlled chaos in AWS environments | AWS-native |

Best Practices for Getting Started

- Start small. Test in lower environments with a small blast radius.

- Set clear objectives. Know what you're measuring.

- Collaborate. Involve SRE, DevOps, QA, and business stakeholders.

- Monitor everything. Track KPIs, SLOs, error budgets.

- Fail forward. Every failure is a learning opportunity.
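Error budgets in particular are easy to compute, and they give a concrete answer to "can we afford to run this experiment right now?" The sketch below is a simplified, time-based calculation that treats the SLO as an availability target over a fixed window. The function names and the 30-day window are assumptions for illustration.

```python
def error_budget_minutes(slo: float, period_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO over the period."""
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction between 0 and 1, e.g. 0.999")
    total_minutes = period_days * 24 * 60
    return (1 - slo) * total_minutes


def budget_remaining(slo: float, period_days: int, downtime_minutes: float) -> float:
    """Remaining budget after subtracting downtime already spent in the period."""
    return error_budget_minutes(slo, period_days) - downtime_minutes


budget = error_budget_minutes(0.999, period_days=30)
remaining = budget_remaining(0.999, 30, downtime_minutes=10.0)
print(f"budget: {budget:.1f} min, remaining: {remaining:.1f} min")
```

A 99.9% SLO over 30 days leaves roughly 43.2 minutes of budget. If most of it is already spent, that is a signal to postpone riskier experiments or shrink their blast radius.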

Real-World Examples: Chaos Engineering at Scale

Netflix: Chaos Monkey and other tools ensure services remain available even when regions go offline.

Google: Tests entire data center and region failure scenarios to ensure multi-regional resilience.

Amazon: Runs game-day exercises simulating large-scale disruptions to test systems and incident response.

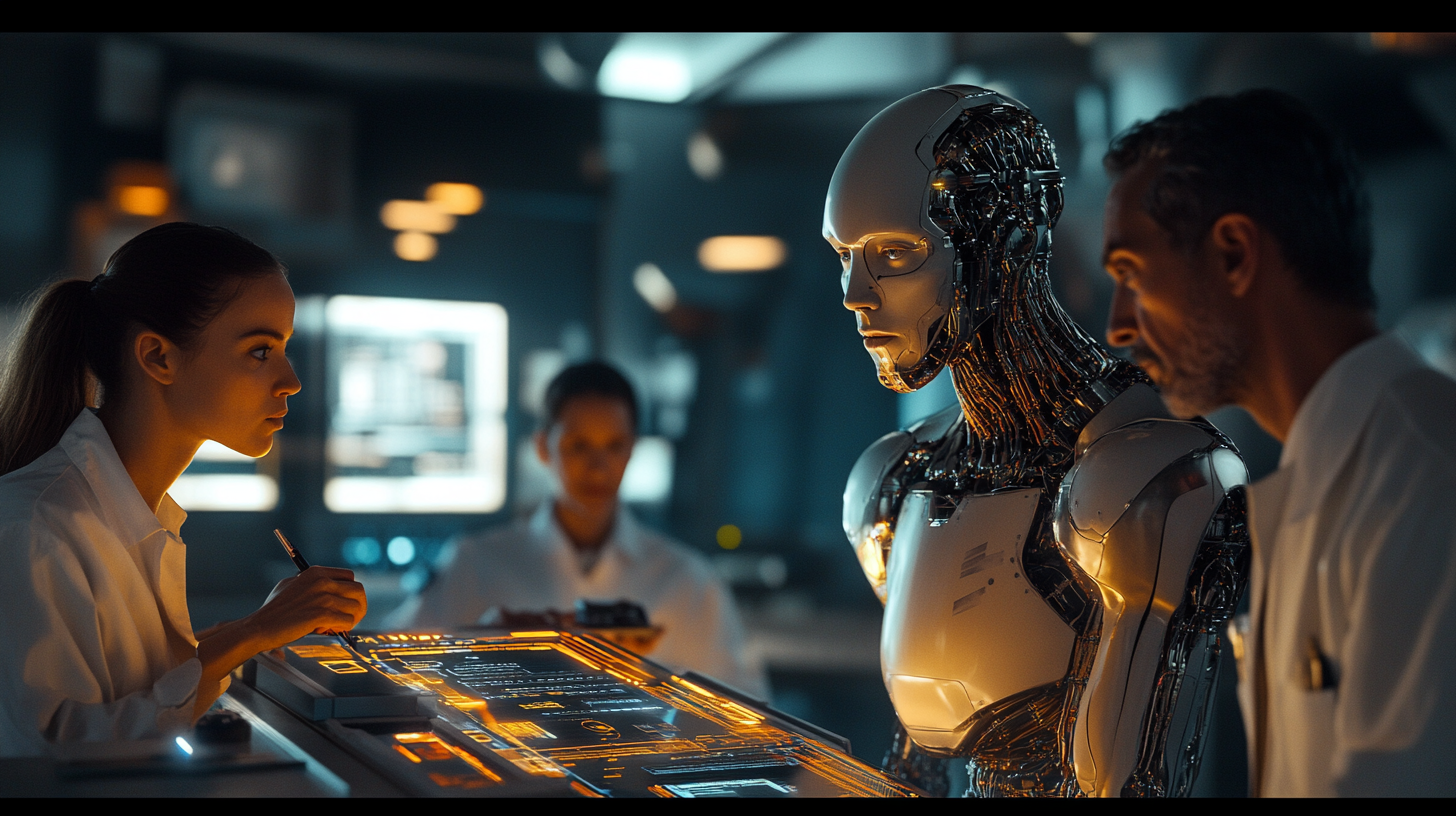

What's Next for Chaos Engineering?

The field is evolving fast. Here are some trends to watch:

- AI-driven fault prediction: Using machine learning to simulate likely failure patterns.

- Automated resilience scoring: Quantify your system’s resilience posture.

- Resilience as code: Versioned, testable chaos experiments as part of CI/CD.

Conclusion: Embrace the Chaos

Chaos Engineering isn’t about being reckless. It’s about being prepared.

By intentionally exploring failure, you build confidence in your system’s ability to recover. And more importantly, you create a culture that values resilience, learning, and continuous improvement.

Start small. Break things safely. And use the insights to build something stronger.
